Is your teen spending too much time on Instagram? Here are Meta’s new tools to protect their accounts

Representative image to illustrate internet safety tools for teenagers | Screenshots of dummy account provided by Meta/Instagram

Instagram has introduced a new set of safety tools for its youngest users in India. The platform’s “Teen Accounts” protections, now officially live, are designed for users under the age of 16. These changes make teen accounts private by default, limit who can message them, and give parents better ways to stay involved in their child’s online experience.

The update is part of Meta’s larger effort to make Instagram a safer space for teenagers. It was first announced on Safer Internet Day earlier this year and was reaffirmed in Meta’s July 2025 safety update. The rollout comes at a time when concerns about online safety, especially for children and teens, are growing rapidly in India, which is home to one of Instagram’s biggest user bases.

Privacy first: what’s new for teens

With the new rules in place, all accounts created by users under 16 are automatically set to private. This means only approved followers can see their posts or tag them. Direct messages are now restricted too, so strangers or people who don’t follow the teen can’t start a conversation.

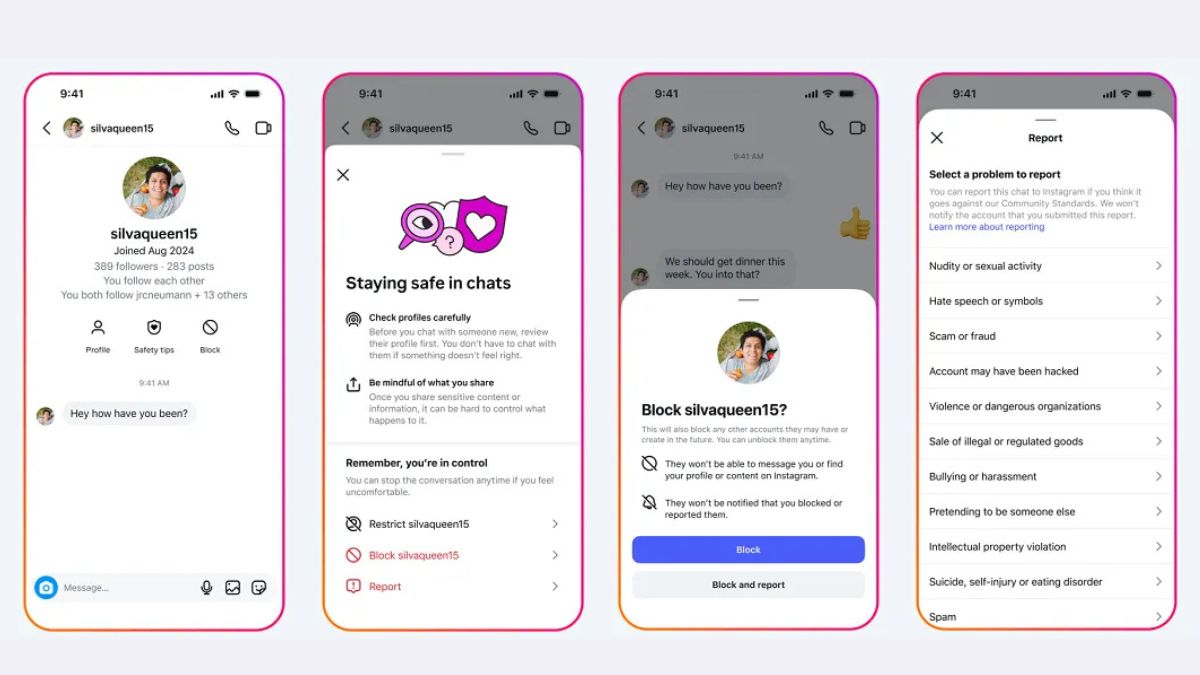

To help teens make safer choices, Instagram has added alerts in chats that give more context about who they’re talking to. For example, users can now see when an account was created or whether it’s based in another country. A new one-tap tool also makes it easier to block and report someone at the same time.

Other features, like going Live or turning off message filters that blur sensitive images, now require parental approval. There’s also a new “Sleep Mode” that mutes notifications between 10 pm and 7 am. If a teen spends more than an hour on the app, they’ll get a gentle reminder about screen time.

In an official statement, Meta said: “We’ve added new safety features to DMs in Teen Accounts to give teens more context about the people they’re chatting with. They’ll see safety tips, account information, and have a combined block-and-report option—all designed to help with safer decisions in private chats.”

Parents get more control

To help families stay involved, Instagram has launched a new parental dashboard. Through it, parents can keep an eye on who their teen is chatting with, approve or change safety settings, and track how much time their child is spending on the app.

These new features come alongside a broader crackdown on harmful behaviour across Meta’s platforms. The company says it recently removed over 6.3 lakh (630,000) accounts from Instagram and Facebook for violating child safety policies. Of those, more than 1.35 lakh (135,000) accounts were caught posting inappropriate comments on content involving children.

Meta also reported that in June alone, teen users blocked over 1 million accounts and reported another 1 million after seeing in-app safety notices. These warnings now appear when a teen is messaged by an account that seems suspicious, such as one that was just created or is located in another country.

This update signals a real shift in how Instagram is thinking about child safety. Instead of expecting teens or their parents to go hunting for the right settings, these protections are now baked into the app experience from the start.

With so many young users in India, the impact of these changes could be significant. The update also reflects a bigger change across the tech world, where platforms are being pushed to put user wellbeing, especially that of teens, at the heart of how their apps work.

Will this be enough to fully protect young users? That remains to be seen. But for now, many Indian teens and their parents may feel a little more at ease knowing that Instagram is finally taking their safety seriously.
