Nvidia ushers in new era of AI with Grace Blackwell chips at COMPUTEX 2025

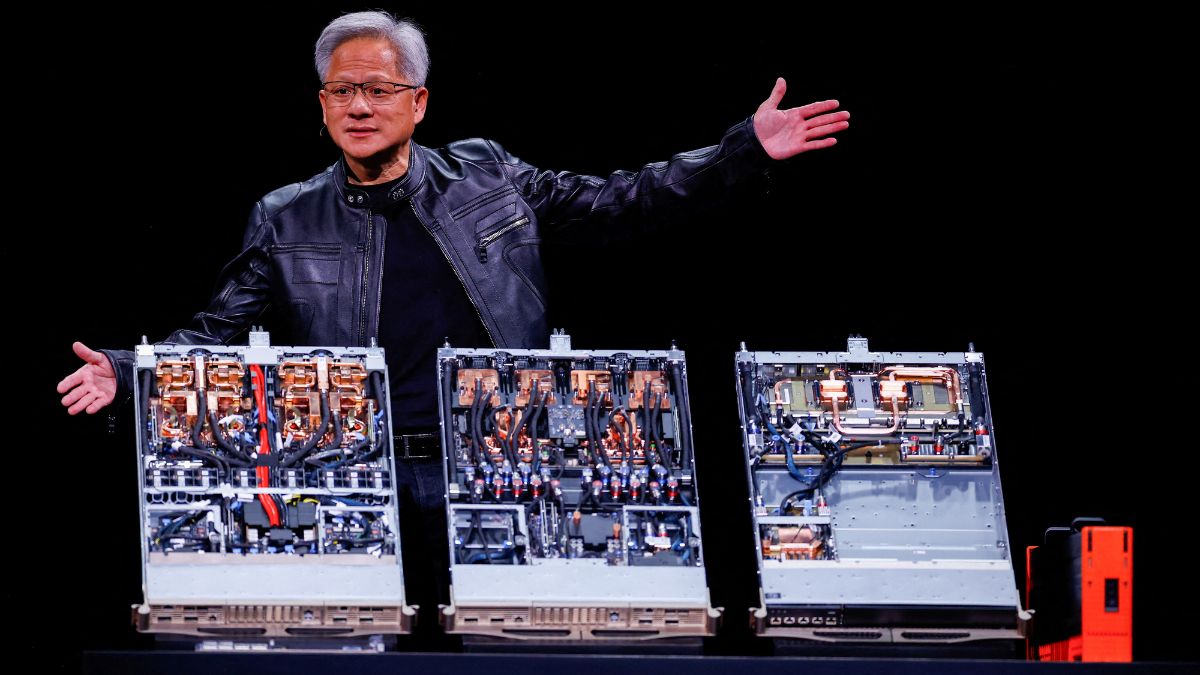

Nvidia CEO Jensen Huang delivers the keynote address at COMPUTEX 2025 in Taiwan | REUTERS

At COMPUTEX 2025 in Taiwan, Nvidia CEO Jensen Huang unveiled what may be the most powerful AI processor architecture to date, the Grace Blackwell NVL72 chip. Positioned as the centrepiece for Nvidia’s future, this hybrid chip design is redefining how AI models are trained and scaled.

The Grace Blackwell chip combines Nvidia’s strengths in GPU acceleration with high-performance CPU processing in a single, tightly integrated package. It is designed to handle the most demanding workloads, including trillion-parameter large language models, real-time inference engines, and next-generation scientific simulations.

Built on an advanced 4nm process with Nvidia’s latest chiplet design, Grace Blackwell chips offer significant improvements in power efficiency and enable more compact and cost-effective AI systems.

The chip also reduces memory bottlenecks, increases bandwidth, and cuts delays, three important performance factors for modern AI and HPC (high-performance computing) tasks. Huang described the new technology as “the engine of the AI factories of the future” and emphasised that the change is “not just in chip design” but in how “AI infrastructure is conceived”.

Also announced at COMPUTEX 2025 was the GB300 AI system, a next-generation platform built around the Grace Blackwell architecture. Set to debut in Q3 2025, GB300 could become the new backbone for hyperscale AI.

It uses cutting-edge CPU and GPU technology to supercharge the development and deployment of large-scale AI models. Tech giants like Microsoft and Amazon hinted at adopting the new system to power their AI data centres.

Another major announcement was the extension of NVLink Fusion, Nvidia’s high-speed interconnect technology, to outside partners. Companies such as MediaTek, Marvell, and Qualcomm will integrate NVLink Fusion into their processors and accelerators. This approach is meant to create an ecosystem that allows a flexible, consistent AI infrastructure, with Nvidia acting as the powerhouse.

As the keynote came to a close, Huang introduced Blackwell Ultra, a future iteration of the chip coming in 2026, to be followed by the Rubin and Feynman architectures by 2028. Nvidia also introduced the DGX Spark, a compact AI workstation designed for researchers and startups, alongside expanded collaborations with global chipmakers to co-develop custom AI semiconductors powered by Nvidia technology.

In addition to these advancements, Nvidia is expanding its AI ecosystem through partnerships and software platforms. The company also revealed a new software platform called Lepton, intended to centralise and streamline cloud-based access to Nvidia GPUs for developers and cloud providers.

Jensen Huang’s COMPUTEX 2025 keynote was more than just a product launch. It can be seen as a declaration of Nvidia’s important role in the upcoming era of AI infrastructure.
